Permutation Invariant Neural Networks

Published at ICML 2019

Authors: Edward Wagstaff*, Fabian Fuchs*, Martin Engelcke*, Ingmar Posner, Michael Osborne (* denotes equal contribution)


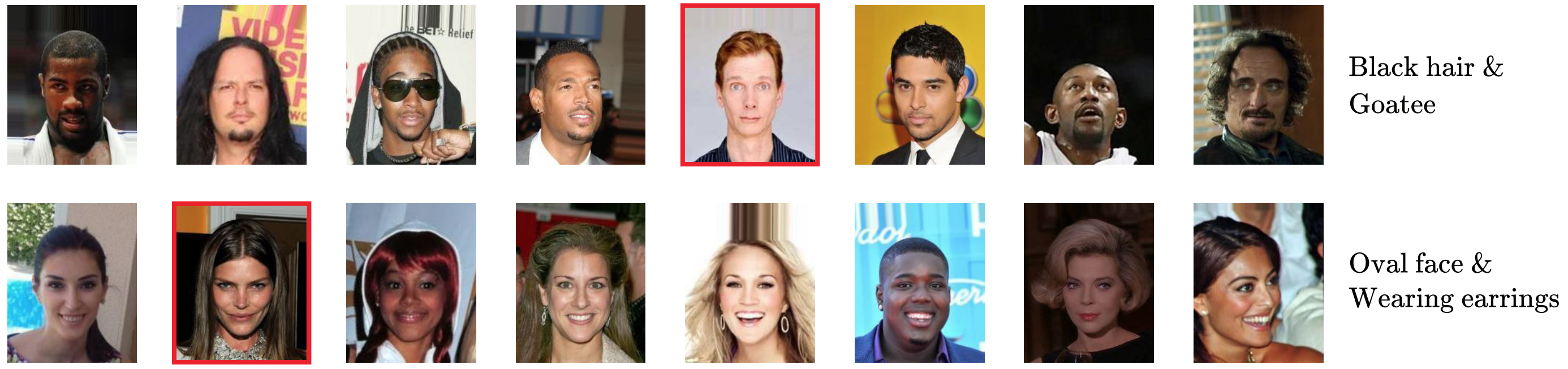

In this project, we looked at set-based problems. A set is a collection of items whose ordering carries no information for the task at hand. One example is anomaly detection: Lee et al. train a neural network to detect outliers in a set of images, as illustrated in the figure above.

In this case, we do not want the model's output to depend on the order in which the images are presented. In other words, we want the model to be permutation invariant with respect to its inputs.
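Stated formally (the symbols f, N and π are introduced here for illustration and do not appear in the original page), a function f of N inputs is permutation invariant if reordering its inputs never changes its output:

```latex
f\left(x_{\pi(1)}, \dots, x_{\pi(N)}\right) = f\left(x_1, \dots, x_N\right)
\quad \text{for every permutation } \pi \in S_N
```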

A prominent permutation invariant neural network architecture is Deep Sets, which models a function on a set X as ρ(Σ_{x∈X} φ(x)): each element is encoded independently by φ, the encodings are summed, and ρ maps the sum to the output. Because a sum does not depend on the order of its terms, every function of this form is permutation invariant. The converse is less straightforward: can every permutation invariant function be represented in this way? This is exactly the question we address in our paper On the Limitations of Representing Functions on Sets.
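To make the sum-decomposition concrete, here is a minimal sketch in plain Python. The encoder `phi`, the decoder `rho` and the function name `deep_sets` are hypothetical, hand-picked choices for illustration, not code from the paper; in an actual Deep Sets model, φ and ρ would be learned neural networks. Because the per-element encodings are combined by a sum, every ordering of the input gives the same output, up to floating-point rounding.

```python
import itertools
import math
from typing import Callable, List, Sequence


# Hypothetical per-element encoder phi: maps one element to a fixed-size
# latent vector (here simple power features; a real model would learn this).
def phi(x: float) -> List[float]:
    return [x, x ** 2, x ** 3]


# Hypothetical decoder rho: applied once, to the pooled (summed) latent vector.
def rho(z: Sequence[float]) -> float:
    return math.tanh(z[0]) + 0.5 * z[1] - 0.1 * z[2]


def deep_sets(
    xs: Sequence[float],
    phi: Callable[[float], List[float]] = phi,
    rho: Callable[[Sequence[float]], float] = rho,
) -> float:
    """Sum-decomposition f(X) = rho(sum over x in X of phi(x)).

    Assumes xs is non-empty so the pooled vector has a defined length.
    """
    latents = [phi(x) for x in xs]                 # encode each element independently
    pooled = [sum(col) for col in zip(*latents)]   # order-independent sum pooling
    return rho(pooled)                             # decode the pooled representation


# Usage: evaluate the model on every ordering of the same set.
xs = [0.3, -1.2, 2.5, 0.7]
outputs = [deep_sets(list(p)) for p in itertools.permutations(xs)]
print(all(math.isclose(o, outputs[0], rel_tol=1e-12, abs_tol=1e-12) for o in outputs))  # True
```

The comparison uses `math.isclose` rather than `==` because floating-point addition is not exactly associative, so summing in a different order can change the last few bits of the result.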

We also wrote a blog post on this topic in collaboration with Ferenc Huszár (Twitter / Magic Pony), which can be found here.