SE(3)-Transformers: 3D Roto-Translation Equivariant Attention Networks

NeurIPS 2020: join us at poster session 6, Thursday 5pm GMT

Authors: Fabian Fuchs*, Daniel E. Worrall*, Volker Fischer, Max Welling.

Credit: Daniel was sponsored by Koninklijke Philips NV. I conducted this project as part of an internship at BCAI. We would like to thank them for their support and contribution to open research in publishing our paper.

In this project, we introduce the SE(3)-Transformer, a variant of the self-attention module for 3D point clouds that is equivariant under continuous 3D roto-translations. Equivariance is important for ensuring stable and predictable performance in the presence of nuisance transformations of the input data. A positive corollary of equivariance is increased weight-tying within the model, leading to fewer trainable parameters and thus decreased sample complexity (i.e. we need less training data). The SE(3)-Transformer leverages the benefits of self-attention to operate on large point clouds with a varying number of points, while guaranteeing SE(3)-equivariance for robustness. We evaluate our model on a toy N-body particle simulation dataset, showcasing the robustness of the predictions under rotations of the input. We further achieve competitive performance on two real-world datasets, ScanObjectNN and QM9. In all cases, our model outperforms a strong, non-equivariant attention baseline and an equivariant model without attention.
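For readers unfamiliar with the term, the standard definition of equivariance can be written as below. The symbols $f$, $T_g$, $S_g$, $R$ and $t$ are notation chosen here for illustration and do not appear on this page; treat this as a general sketch of the concept rather than the exact formulation used in the paper.

$$
f\big(T_g[x]\big) = S_g\big[f(x)\big] \quad \text{for all } g \in G
$$

Here $f$ is the network (or a single layer), $G$ is the group of transformations, $T_g$ is how $g$ acts on the input, and $S_g$ is how it acts on the output. For 3D roto-translations, $g = (R, t)$ acts on point positions as $x_i \mapsto R x_i + t$. Invariance is the special case in which $S_g$ is the identity, so that $f(T_g[x]) = f(x)$.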

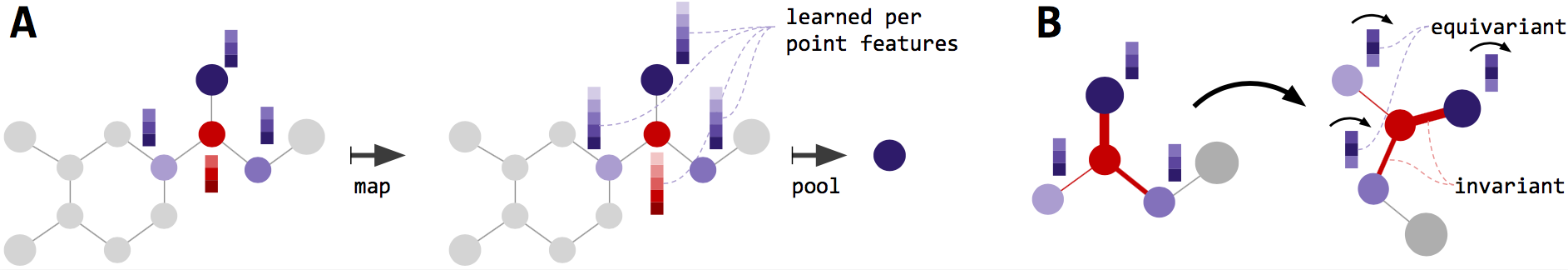

The figure above shows an SE(3)-Transformer applied to a molecular classification dataset. A) Each layer of the SE(3)-Transformer maps from a point cloud to a point cloud while guaranteeing equivariance. For classification, this is followed by an invariant pooling layer and an MLP. B) In each layer, attention is performed for each node. Here, the red node attends to its neighbours. Attention weights (indicated by line thickness) are invariant w.r.t. rotation of the input.
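To make the invariance of attention weights concrete, here is a minimal NumPy sketch. It is a toy illustration and not the SE(3)-Transformer itself: the functions `toy_attention`, `toy_layer` and `random_rotation` are hypothetical names introduced here, and the attention logits are assumed to depend only on pairwise distances, which is one simple way (not the paper's way) to obtain rotation- and translation-invariant weights. The check at the end verifies numerically that the weights are invariant and that the attention-weighted average of positions is equivariant.

```python
import numpy as np


def random_rotation(rng):
    """Return a random 3x3 rotation matrix (orthogonal, determinant +1)."""
    # QR decomposition of a Gaussian matrix yields an orthogonal matrix q.
    q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
    # Flip one column if q is a reflection, so that det(q) = +1.
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q


def toy_attention(points):
    """Softmax attention weights from pairwise squared distances.

    Assumption for this sketch: logits are -||x_i - x_j||^2, so they are
    unchanged by any rotation or translation applied to all points.
    """
    diff = points[:, None, :] - points[None, :, :]
    logits = -np.sum(diff ** 2, axis=-1)
    np.fill_diagonal(logits, -np.inf)  # a node does not attend to itself
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    return weights / weights.sum(axis=1, keepdims=True)


def toy_layer(points):
    """Map a point cloud to a point cloud: attention-weighted neighbour average."""
    return toy_attention(points) @ points


rng = np.random.default_rng(0)
points = rng.normal(size=(5, 3))   # 5 points in 3D
rotation = random_rotation(rng)
translation = rng.normal(size=3)
moved = points @ rotation.T + translation  # apply the roto-translation

# Invariance: attention weights do not change when the input is moved.
assert np.allclose(toy_attention(points), toy_attention(moved))
# Equivariance: moving the input moves the output in the same way.
assert np.allclose(toy_layer(moved), toy_layer(points) @ rotation.T + translation)
```

The equivariance of `toy_layer` follows because each row of the weight matrix sums to one, so a shared rotation and translation pass straight through the weighted average.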

Code

- find the code here

Paper

- find the paper here